In this issue, we cover the top ML Papers of the Week (Feb 13 - Feb 19).

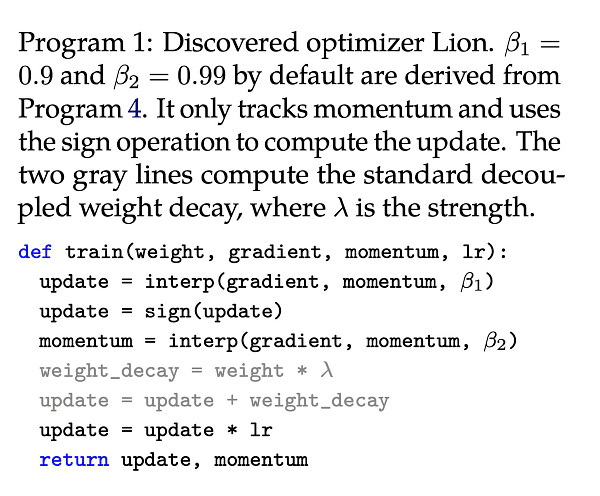

1). Lion (EvoLved Sign Momentum) - a simple and effective optimization algorithm that’s more memory-efficient than Adam. (paper)

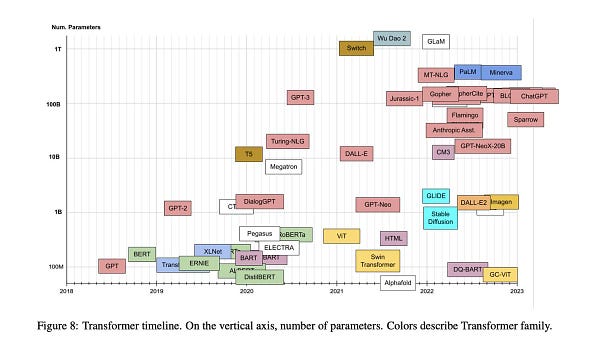
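Here is a minimal NumPy sketch of a single Lion update, following the update rule described in the paper as we understand it. The step direction is the sign of an interpolation between the momentum and the current gradient. Only one state buffer (the momentum) is kept, compared with Adam's two, which is where the memory saving comes from. The function name `lion_step` and the default hyperparameters are illustrative assumptions, not the authors' reference code.

```python
import numpy as np

def lion_step(param, grad, m, lr=1e-4, beta1=0.9, beta2=0.99, weight_decay=0.0):
    """One Lion update (hypothetical helper); returns (new_param, new_momentum).

    Only the momentum buffer `m` is stored between steps, whereas Adam stores
    both first- and second-moment estimates.
    """
    # Interpolate momentum and the current gradient, then keep only the sign.
    update = np.sign(beta1 * m + (1.0 - beta1) * grad)
    # Decoupled weight decay, applied alongside the sign update.
    new_param = param - lr * (update + weight_decay * param)
    # The stored momentum uses a separate coefficient, beta2.
    new_m = beta2 * m + (1.0 - beta2) * grad
    return new_param, new_m

# Example: one step from zero momentum moves each coordinate by exactly lr.
p, m = lion_step(np.array([1.0, -1.0]), np.array([2.0, -3.0]), np.zeros(2), lr=0.1)
print(p, m)
```

Because the sign operation gives every coordinate an update of the same magnitude, the paper suggests using a smaller learning rate for Lion than for Adam.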

2). Transformer models: an introduction and catalog. (paper)
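The catalog is built around the transformer, so here is a minimal NumPy sketch of its core operation: single-head scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. The function name and the boolean-mask convention are assumptions for illustration. Real implementations add multiple heads, batching, and learned query/key/value projections.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v, mask=None):
    """Single-head attention: softmax(q k^T / sqrt(d_k)) v.

    q: (n_q, d_k), k: (n_k, d_k), v: (n_k, d_v).
    mask: optional boolean (n_q, n_k), True where attention is allowed;
    every query row must allow at least one key.
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    # Subtract each row's max before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

# Example with a causal (decoder-style) mask: query i only sees keys 0..i.
rng = np.random.default_rng(0)
q, k, v = rng.normal(size=(4, 8)), rng.normal(size=(4, 8)), rng.normal(size=(4, 3))
out, w = scaled_dot_product_attention(q, k, v, mask=np.tril(np.ones((4, 4), dtype=bool)))
```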

3). pix2pix3D - a 3D-aware conditional generative model extended with neural radiance fields for controllable photorealistic image synthesis. (paper)

4). Moral Self-Correction in Large Language Models - finds strong evidence that language models trained with RLHF have the capacity for moral self-correction. The capability emerges at 22B model parameters and typically improves with scale. (paper)

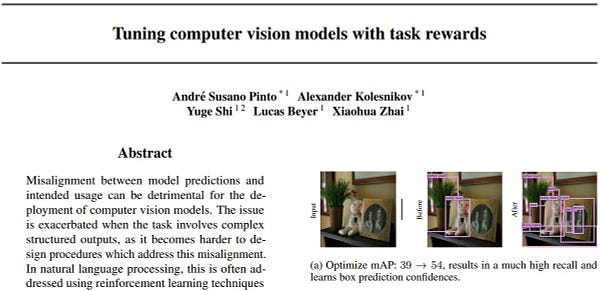

5). Vision meets RL - uses reinforcement learning to align computer vision models with task rewards; observes large performance boosts across multiple CV tasks such as object detection and colorization. (paper)

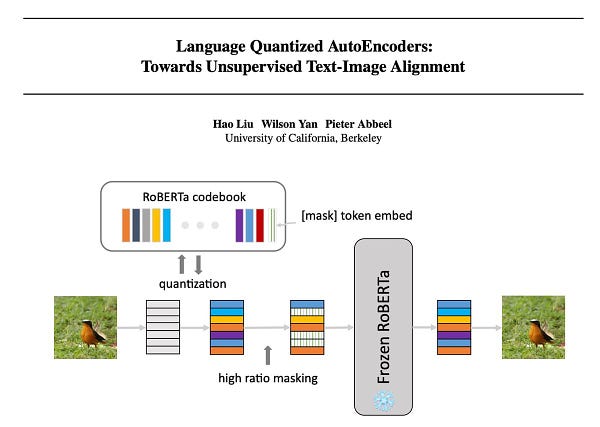
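Here is a toy sketch of the idea, assuming a REINFORCE-style policy gradient with a mean-reward baseline. A categorical "model" samples outputs, a task reward scores each output, and the logits are nudged toward higher-reward outputs. The function `reinforce_finetune`, the three-candidate setup, and all hyperparameters are hypothetical. The paper tunes full vision models, not a single categorical distribution.

```python
import numpy as np

def reinforce_finetune(logits, rewards, steps=500, batch=64, lr=0.5, seed=0):
    """Toy REINFORCE with a mean-reward baseline over a categorical 'model'.

    logits: initial scores over a discrete set of candidate outputs.
    rewards: task reward of each candidate (stand-in for e.g. a detection metric).
    Returns the final output probabilities.
    """
    rng = np.random.default_rng(seed)
    logits = np.array(logits, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    n = len(logits)
    for _ in range(steps):
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()
        # Sample outputs from the current model and score them with the reward.
        actions = rng.choice(n, size=batch, p=probs)
        r = rewards[actions]
        advantage = r - r.mean()  # the baseline reduces gradient variance
        # Gradient of log p(a) with respect to the logits is onehot(a) - probs.
        onehot = np.eye(n)[actions]
        grad = (advantage[:, None] * (onehot - probs)).mean(axis=0)
        logits += lr * grad  # ascend the expected reward
    probs = np.exp(logits - logits.max())
    return probs / probs.sum()

# Example: candidate 1 has the highest reward, so probability mass shifts to it.
p = reinforce_finetune([0.0, 0.0, 0.0], [0.0, 1.0, 0.2])
```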

6). Language Quantized AutoEncoders (LQAE) - an unsupervised method for text-image alignment that leverages pretrained language models; it enables few-shot image classification with LLMs. (paper)
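As we understand it, LQAE's key step is to quantize image encoder outputs against the token embedding table of a frozen pretrained language model. An image then becomes a sequence of text tokens that the LM can read. The sketch below shows only that nearest-neighbor lookup. `quantize_to_vocab` is a hypothetical name, and a toy codebook stands in for real LM embeddings. Training is omitted, including the encoder, the decoder, and how gradients pass through the lookup.

```python
import numpy as np

def quantize_to_vocab(image_features, token_embeddings):
    """Map each image feature vector to its nearest token embedding (L2).

    image_features: (n_patches, d); token_embeddings: (vocab_size, d).
    Returns (token_ids, quantized_vectors).
    """
    # ||x - e||^2 = ||x||^2 - 2 x.e + ||e||^2, computed for all pairs at once.
    d2 = (
        (image_features ** 2).sum(axis=1, keepdims=True)
        - 2.0 * image_features @ token_embeddings.T
        + (token_embeddings ** 2).sum(axis=1)
    )
    token_ids = d2.argmin(axis=1)
    return token_ids, token_embeddings[token_ids]

# Example with a toy 4-token vocabulary in 4 dimensions.
vocab = np.eye(4)
feats = np.array([[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 0.2, 1.1]])
ids, quantized = quantize_to_vocab(feats, vocab)
```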

7). Augmented Language Models - a survey of language models augmented with reasoning skills and the ability to use tools. (paper)

8). Geometric Clifford Algebra Networks (GCANs) - an approach to incorporate geometry-guided transformations into neural networks using geometric algebra. (paper)
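In geometric algebra, rotations are commonly written as a sandwich product R v reverse(R) with a rotor R. The sketch below implements the geometric product of the 2D algebra Cl(2,0) and uses it to rotate a vector. It illustrates the kind of geometry-guided transformation GCANs build on, not the GCAN layers themselves. All function names are hypothetical.

```python
import math

def gp(a, b):
    """Geometric product in Cl(2,0); multivectors are (scalar, e1, e2, e12)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (
        a0 * b0 + a1 * b1 + a2 * b2 - a3 * b3,  # scalar part (e12 * e12 = -1)
        a0 * b1 + a1 * b0 - a2 * b3 + a3 * b2,  # e1 part
        a0 * b2 + a2 * b0 + a1 * b3 - a3 * b1,  # e2 part
        a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1,  # e12 (bivector) part
    )

def rotor(theta):
    """R = cos(theta/2) - sin(theta/2) e12; rotates vectors counterclockwise."""
    return (math.cos(theta / 2), 0.0, 0.0, -math.sin(theta / 2))

def reverse(m):
    # Reversion flips the sign of the bivector part in 2D.
    return (m[0], m[1], m[2], -m[3])

def rotate(v, theta):
    """Apply the sandwich product R v reverse(R) to a vector v = (x, y)."""
    R = rotor(theta)
    out = gp(gp(R, (0.0, v[0], v[1], 0.0)), reverse(R))
    return out[1], out[2]

# Example: rotating the x-axis by 90 degrees gives the y-axis.
x, y = rotate((1.0, 0.0), math.pi / 2)
```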

9). Auditing large language models - proposes a policy framework for auditing LLMs. (paper)

10). Energy Transformer - a transformer architecture that replaces the sequence of feedforward transformer blocks with a single large Associative Memory model; it builds on the renewed popularity of Hopfield networks in ML. (paper)
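For readers new to the associative-memory view, here is a minimal sketch of a continuous modern Hopfield retrieval step, xi <- X softmax(beta X^T xi). The step pulls a noisy query toward the closest stored pattern. This is a generic Hopfield update, assumed for illustration. It is not the Energy Transformer's own energy function or update rule, and `hopfield_retrieve` is a hypothetical name.

```python
import numpy as np

def hopfield_retrieve(patterns, query, beta=8.0, steps=3):
    """Continuous modern Hopfield update: xi <- X softmax(beta * X^T xi).

    patterns: (d, n) matrix X whose columns are the stored memories.
    query: (d,) possibly corrupted pattern.
    """
    xi = np.asarray(query, dtype=float)
    for _ in range(steps):
        scores = beta * (patterns.T @ xi)
        scores -= scores.max()  # numerical stability
        weights = np.exp(scores)
        weights /= weights.sum()
        xi = patterns @ weights  # convex combination of stored patterns
    return xi

# Example: three orthogonal +/-1 patterns; the query is pattern 1 with one bit flipped.
p0 = np.array([1, 1, 1, 1, 1, 1, 1, 1], dtype=float)
p1 = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
p2 = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
X = np.stack([p0, p1, p2], axis=1)
noisy = p1.copy()
noisy[0] = -1.0
recalled = hopfield_retrieve(X, noisy)
```

With a large beta, the update snaps to a single stored pattern almost immediately. Smaller values of beta yield blends of several patterns.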

See you next week for another round of awesome ML papers!